By incorporating AI-native design principles, wireless engineers can develop systems and networks that meet today’s needs and are equipped to evolve with tomorrow’s wireless requirements and advancements.

Next-generation wireless systems, such as base stations, cellular phones, and Wi-Fi modems, push complexity limits with ever-increasing capacity demands, greater coverage expectations, and a massive user surge. Enter AI-native technologies — designed to tackle these challenges by continuously learning, adapting, and optimizing in real time. Designing complex AI-native wireless systems for 5G, 5G Advanced, and 6G demands that wireless engineers master advanced modeling and simulation skills essential in modern signal processing.

Wireless engineers who rely on Design Space Exploration (DSE) to predict and test the behavior of their wireless systems before deployment need fluency with more advanced signal processing skills, because the multivariable, user-dense nature of 6G and beyond wireless systems is too complex for traditional methods. AI-native systems tackle this complexity by directly deriving models from data and continuously updating them with real-world inputs.

AI-native wireless systems integrate AI algorithms directly into their core, which can improve coverage, increase capacity, and bring robust reliability to the variety of wireless systems depicted in Figure 1. Unlike traditional systems with rigid, predefined models, AI-native designs learn and adapt to their environment, offering greater scalability while reducing the need for costly, time-consuming signal processing.

Figure 1. AI-native technologies enhance a wide range of systems and networks, from short-range personal area networks to long-range global area networks.

Design and integration

The shift from traditional to AI-native systems is transforming the foundational signal-processing knowledge wireless engineers previously acquired. While signal processing itself isn’t new, 5G and 6G demand more sophisticated applications involving data integration, computational efficiency, and power optimization. This evolution requires wireless engineers to develop new skills. For instance, integrating AI-native machine learning techniques now involves pattern recognition, anomaly detection, and predictive analytics for proactive network management.

Previously, wireless networks primarily supported human-to-human communication, resulting in simple architectures and few devices. Today, engineers must design intelligent systems capable of enabling machine-to-machine communication, incorporating components that include sensors and actuators to achieve real-time computation.

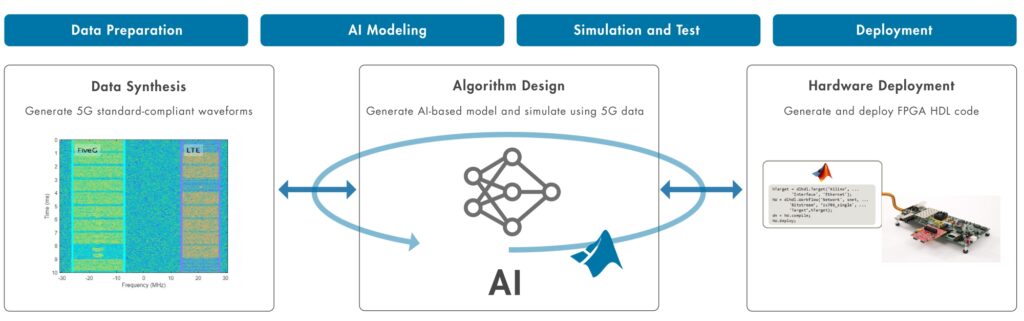

To help wireless engineers with skill building, a well-designed workflow can break down a complex system into more manageable steps while supporting a predictable framework. The design workflow shown in Figure 2 should include:

- Gathering and generating data for the AI-native model

- Training and testing the AI-native model

- Implementing and integrating the AI-native model

Figure 2. An AI-native design workflow includes gathering data, training and testing the model, and implementing and integrating it into wireless systems.

Gathering and generating data for the AI-native model

AI-native systems are only helpful when trained on a large quantity of representative data. The first step in creating an AI-native wireless system involves gathering and generating data. There are three methods of generating data:

- Acquiring real-world over-the-air (OTA) signals using hardware.

- Synthesizing data using a virtual representation of the system, known as a digital twin.

- Using statistical models to generate synthetic data that best represents the real world.

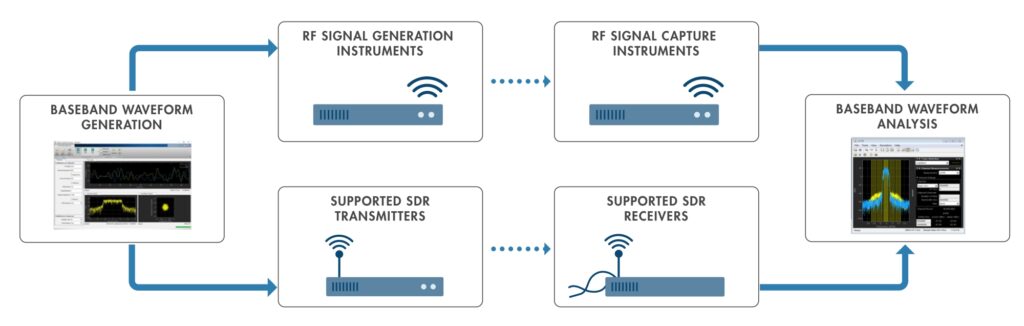

Real-world data is the most reliable for training a model but can be difficult to obtain. As depicted in Figure 3, acquiring this type of data requires physical hardware, and the data must be captured at the right time and across the relevant spectra and frequencies. Engineers must also consider whether the hardware has sufficient power and storage space and whether the project has the bandwidth to capture the required amount of data.

Figure 3. Acquiring real-world data for AI training involves waveform generation — transmitting waveforms over-the-air using RF instruments or software-defined radios (SDR), capturing them from RF back to baseband using RF capture instruments or SDRs, and finally analyzing and adding them to AI training or testing data sets.
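The storage question can be checked with simple arithmetic before a capture campaign starts. The sketch below is illustrative only: the function name, the 16-bit I/Q sample format, and the example sample rate are assumptions, not values from any particular instrument.

```python
def capture_size_bytes(sample_rate_hz, duration_s, bits_per_component=16, channels=1):
    """Estimate raw storage for a baseband I/Q capture.

    Assumes each complex sample stores I and Q with the same bit width
    and that no compression or file-format overhead is applied.
    """
    bytes_per_iq_sample = 2 * bits_per_component // 8  # I plus Q
    num_samples = int(sample_rate_hz * duration_s)
    return num_samples * bytes_per_iq_sample * channels


# Example: a hypothetical 10-second capture at 122.88 MS/s on one channel.
size = capture_size_bytes(122.88e6, 10)
print(f"{size / 1e9:.2f} GB")
```

Even a short wideband capture reaches several gigabytes under these assumptions, which is why storage and capture duration are worth budgeting together.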

Alternatively, many engineers use digital twins (representative virtual models) to augment data sets and train AI-native systems. Digital twins help ensure that AI-native systems have sufficient data to handle adverse situations and efficiently manage system elements. They produce reliable signals that closely match OTA signals and can be generated with less difficulty. Because these signals are computer-generated, engineers have more control over them, and they solve many of the challenges presented by OTA data gathering. However, capturing the complexity and accuracy needed to represent real-world data can be demanding when using digital twins. Many parameters and provisions must be built into the digital twin to ensure it is a reliable replacement for the physical system.

In the absence of a sufficient amount of data, engineers must augment the data set by using synthesis algorithms. This data type is the engineer’s best estimate because statistical models and algorithms capture the most likely scenarios. Generating synthetic data requires meticulous effort because it must be manually crafted, with algorithms and parameters that accurately reflect real-world data while including anomalous cases. Engineers must use data that accurately mirrors the real world to train AI models and create advanced algorithms that synthesize less common real-world scenarios to complete the design space.
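As a rough illustration of statistical synthesis, the sketch below generates labeled QPSK symbols in additive white Gaussian noise and occasionally injects a deep fade to stand in for a less common scenario. The modulation, the channel model, the 20 dB fade depth, and all function and parameter names are assumptions chosen for brevity; a real data set would use models matched to the target system.

```python
import cmath
import math
import random

# QPSK constellation with unit average power; the list index is the label.
QPSK = [cmath.exp(1j * (math.pi / 4 + k * math.pi / 2)) for k in range(4)]


def synthesize_dataset(num_samples, snr_db, fade_probability=0.0, seed=0):
    """Return (received_sample, label) pairs for QPSK in Gaussian noise."""
    rng = random.Random(seed)
    # Noise standard deviation per real dimension for unit signal power.
    noise_std = math.sqrt(10 ** (-snr_db / 10) / 2)
    dataset = []
    for _ in range(num_samples):
        label = rng.randrange(4)
        # Rare anomalous case: a deep fade attenuates the symbol by 20 dB.
        gain = 0.1 if rng.random() < fade_probability else 1.0
        noise = complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std))
        dataset.append((gain * QPSK[label] + noise, label))
    return dataset


# Mostly nominal data, with roughly 5% deep-fade samples mixed in.
training_data = synthesize_dataset(1000, snr_db=15.0, fade_probability=0.05)
```

Seeding the generator keeps the data set reproducible, so the same synthetic cases can be reused when comparing models.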

Training and testing the AI-native model

Engineers use the data collected to train a representative model. Each model is defined by a set of parameters, which are updated and optimized during training. Many parameters are involved in adequately representing a complex system, including bandwidth allocation, latency, signal strength, modulation, and coding. Engineers apply machine learning algorithms to optimize essential system functions using these parameters and the comprehensive dataset obtained in the previous step. They must ensure the training process is efficient and rapid, considering factors such as data throughput that will impact real-time performance.

After training, an AI-native model needs validation to ensure reliable performance in real-world systems, as shown in Figure 4. Validation aims to evaluate the model’s applicability beyond training data and performance under various real-world conditions. Engineers perform validation by running the model within the real-world system, ensuring system-level metrics remain within acceptable ranges. They also compare the model’s predictions with actual outcomes to identify discrepancies and address potential weaknesses. Once these checks pass, the model can be expected to operate reliably and efficiently in real-world applications.

Figure 4. Steps involved in training and testing/validating AI models: First, use pre-labeled synthesized or over-the-air data to train the AI model. Second, test and validate the trained model with new data it has not been trained on.
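The train-then-validate pattern can be sketched with a deliberately small model. The example below fits a two-parameter logistic-regression detector to noisy BPSK samples by gradient descent and then measures accuracy on data it has not seen. The BPSK data model, the learning rate, and every function name here are illustrative assumptions; production models and data sets are far larger.

```python
import math
import random


def make_data(num_samples, noise_std, seed):
    """Hypothetical labeled data: BPSK symbols (+1 or -1) in Gaussian noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(num_samples):
        label = rng.randrange(2)
        symbol = 1.0 if label == 1 else -1.0
        data.append((symbol + rng.gauss(0, noise_std), label))
    return data


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(train_set, epochs=20, learning_rate=0.5):
    """Update the model parameters (w, b) with batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(train_set)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in train_set:
            error = sigmoid(w * x + b) - y
            grad_w += error * x
            grad_b += error
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


def accuracy(params, data):
    """Fraction of samples whose predicted label matches the true label."""
    w, b = params
    correct = sum((w * x + b > 0) == (y == 1) for x, y in data)
    return correct / len(data)


params = train(make_data(400, noise_std=0.5, seed=0))
held_out = make_data(1000, noise_std=0.5, seed=1)  # data not used in training
print(f"held-out accuracy: {accuracy(params, held_out):.3f}")
```

Using a different seed for the held-out set keeps the validation data separate from the training data, which is the point of the second step in Figure 4.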

Once validation is complete, the AI-native network is compressed by converting the model to fixed-point arithmetic and pruning neural network layers that do not contribute to the system’s overall behavior. The model is then ready for implementation and final integration within the wireless system.
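The two compression ideas can be illustrated on a plain list of weights. Note the substitutions: this sketch prunes individual small-magnitude weights rather than whole layers, and it uses a signed 8-bit format with 7 fractional bits. Both choices, along with the function names and the pruning threshold, are assumptions made for illustration.

```python
def prune_small_weights(weights, threshold):
    """Zero out weights whose magnitude is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def to_fixed_point(weights, frac_bits=7, word_bits=8):
    """Quantize floats to signed fixed-point integer codes with saturation."""
    scale = 1 << frac_bits
    lowest = -(1 << (word_bits - 1))
    highest = (1 << (word_bits - 1)) - 1
    return [max(lowest, min(highest, round(w * scale))) for w in weights]


def from_fixed_point(codes, frac_bits=7):
    """Convert integer codes back to floats to inspect quantization error."""
    return [c / (1 << frac_bits) for c in codes]


weights = [0.5, 0.001, -0.3, -0.004, 0.82]
pruned = prune_small_weights(weights, threshold=0.01)
codes = to_fixed_point(pruned)
restored = from_fixed_point(codes)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(codes, max_error)
```

Comparing `restored` against the original weights gives a quick view of how much precision the chosen word length costs before the model is revalidated.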

Implementing and integrating the AI-native model

An AI-native model is only useful when implemented as part of a real-world system. Implemented AI components must be efficient in power usage, memory requirements, and computational complexity; otherwise, the system cannot respond to changing overall conditions in real time.

The first step in implementing an AI-native component is scaling and resource assessment. During this phase, engineers evaluate the processing power and memory requirements for the AI-native models to operate efficiently. Assessing these resources enables engineers to generate code that can run in real time, ensuring optimal performance and reliability of the overall system.
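A first-pass resource assessment can be done on paper. The sketch below counts parameters, weight memory, and multiply-accumulate (MAC) operations for a fully connected network and compares them with a target's budget. The layer sizes, the one-byte weights, the sustained MAC rate, and the deadline are all hypothetical, and the latency estimate ignores memory traffic and activation functions.

```python
def dense_network_cost(layer_sizes, bytes_per_weight=1):
    """Count parameters, weight memory, and MACs for a fully connected network."""
    parameters = 0
    macs = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        macs += n_in * n_out                 # one MAC per weight
        parameters += n_in * n_out + n_out   # weights plus biases
    return {
        "parameters": parameters,
        "memory_bytes": parameters * bytes_per_weight,
        "macs_per_inference": macs,
    }


def fits_target(cost, memory_budget_bytes, macs_per_second, deadline_s):
    """Check the model against a memory budget and a real-time deadline."""
    latency_s = cost["macs_per_inference"] / macs_per_second
    return cost["memory_bytes"] <= memory_budget_bytes and latency_s <= deadline_s


cost = dense_network_cost([64, 32, 8])
print(cost)
print(fits_target(cost, memory_budget_bytes=4096, macs_per_second=1e6, deadline_s=0.005))
```

An estimate like this will not replace profiling on the actual target, but it can rule out architectures that clearly exceed the budget before any code is generated.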

Next, wireless engineers use either manual or automatic code generation to deploy pre-trained AI-native models on desktop or embedded targets using low-level code. Automating code generation can simplify the deployment process by eliminating the need for manual coding, which can be time-consuming and prone to errors. This step prepares the AI-native model for effective, efficient integration into the real-world system.

Automatic code generation comes at the cost of customization. Given this, wireless engineers can use automatic code generation at the beginning of the design process to narrow down the most promising design parameters, then focus their resources on refining those designs with manual coding. This approach accelerates development cycles and reduces time-to-market.

The generated code is now ready to be integrated into the overall wireless system. It is designed to ensure the newly implemented AI-native components work with the rest of the legacy system. Engineers merge the new AI-native components with existing infrastructure during the integration phase. They also input the same system data used during the testing phase into the system hardware to verify that the AI-native solution performs accurately under real-world conditions.

At this point, engineers analyze the overall system performance to ensure the integrated system works as intended. They must confirm that the implemented and integrated hardware satisfies the overall metrics. Also, the AI-native components must enhance the legacy system without introducing new inefficiencies.

AI-native design challenges

Integrating AI-native components into wireless systems presents various hurdles, including balancing conflicting performance metrics and ensuring superior performance relative to legacy systems. The goal is to achieve a balance that supports operational objectives by delivering high-quality overall performance.

Within AI-native systems, balancing performance metrics is a complex endeavor for wireless engineers because these systems often need to simultaneously optimize multiple, sometimes conflicting, objectives. For example, increasing one metric, such as capacity, might degrade latency or energy efficiency, necessitating a tradeoff. AI-native systems must find a balance between various metrics, such as speed, coverage, robustness, and energy consumption, which can be difficult to achieve simultaneously. Wireless engineers can explore various scenarios and configurations through modeling and simulation to balance the desired metrics without disrupting the system.

Wireless engineers also struggle with complex interactions in dynamic environments. AI-native systems operate in real-world conditions that constantly change, such as network congestion, signal interference, or varying user demands. This dynamic nature makes it hard to maintain consistent performance across all metrics without sacrificing one for another. In addition, many performance metrics in AI-native systems are interdependent. Improving one might inadvertently affect others, making the optimization process highly complex. AI algorithms need to account for these intricate relationships, often requiring advanced strategies like multi-objective optimization.
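One basic building block of multi-objective optimization is the Pareto front: the set of configurations that no other configuration beats on every metric at once. The sketch below filters a handful of candidate configurations on capacity, latency, and energy. The configuration names and metric values are invented for illustration, and real studies would evaluate candidates through simulation rather than hard-coded numbers.

```python
from typing import NamedTuple


class Config(NamedTuple):
    name: str
    capacity_mbps: float  # higher is better
    latency_ms: float     # lower is better
    energy_mj: float      # lower is better


def dominates(a, b):
    """True if a is no worse than b on every metric and better on at least one."""
    no_worse = (a.capacity_mbps >= b.capacity_mbps
                and a.latency_ms <= b.latency_ms
                and a.energy_mj <= b.energy_mj)
    strictly_better = (a.capacity_mbps > b.capacity_mbps
                       or a.latency_ms < b.latency_ms
                       or a.energy_mj < b.energy_mj)
    return no_worse and strictly_better


def pareto_front(configs):
    """Keep only the configurations that no other configuration dominates."""
    return [c for c in configs if not any(dominates(o, c) for o in configs)]


candidates = [
    Config("A", 100.0, 10.0, 5.0),
    Config("B", 150.0, 15.0, 6.0),
    Config("C", 90.0, 12.0, 6.0),
    Config("D", 150.0, 15.0, 7.0),
]
front = pareto_front(candidates)
print([c.name for c in front])
```

Configurations on the front represent genuine tradeoffs, for example higher capacity at the price of latency, so choosing among them remains an engineering decision tied to the operational objectives.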

AI models depend on data for training and optimization. In 5G or 6G networks, the complex and constantly changing environment with its real-world data makes it harder for AI systems to generalize and maintain a balance across performance metrics under various conditions.

Modeling and simulation are crucial in addressing conflicting goals, dynamic environments, interdependent performance metrics, and data variability. Modeling and simulation offer a lifeline for wireless engineers, letting them digitally explore various scenarios and configurations to balance the desired metrics without disrupting the system. This, in turn, allows wireless engineers to adjust, verify, and validate a configuration before deploying it onto the system.

Ensuring superior performance

For superior performance, it is essential to transition from legacy wireless systems to AI-native systems without disruption. AI-native models that continuously learn are critical to this transition, enabling the system to adapt to dynamic network conditions. Achieving superior performance requires training models using diverse, representative datasets.

One solution is to simulate the integrated system before full-scale deployment to ensure AI-native components interoperate properly with legacy systems. Engineers use simulation software to facilitate interoperability testing and identify potential compatibility issues and performance bottlenecks.

To optimize the performance of AI-native wireless systems, engineers must establish efficient communication between a user’s device and the network. The device must relay Channel State Information (CSI) to the network, including channel conditions and critical device information like location and data needs. Engineers must balance how much CSI data is transmitted to fulfill user needs. Transmitting excessive CSI can consume valuable network bandwidth. AI-native systems can intelligently compress this information, ensuring minimal data is sent while maximizing the resources available to the user’s device.
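The feedback tradeoff can be made concrete with a simple, non-learned stand-in for an AI compressor. The sketch below converts a channel frequency response to the delay domain, reports only the strongest taps, and measures the reconstruction error at the network side. Keeping the top taps is a swapped-in classical technique, not the learned compression the text refers to, and the channel values and function names are hypothetical.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def compress_csi(freq_response, num_taps):
    """Device side: keep only the strongest delay-domain taps as (index, value)."""
    taps = idft(freq_response)
    order = sorted(range(len(taps)), key=lambda i: abs(taps[i]), reverse=True)
    return [(i, taps[i]) for i in sorted(order[:num_taps])]


def reconstruct_csi(feedback, num_subcarriers):
    """Network side: rebuild the frequency response from the reported taps."""
    taps = [0j] * num_subcarriers
    for index, value in feedback:
        taps[index] = value
    return dft(taps)


def nmse(true, estimate):
    """Normalized mean squared error between two channel responses."""
    error = sum(abs(a - b) ** 2 for a, b in zip(true, estimate))
    return error / sum(abs(a) ** 2 for a in true)


# Hypothetical 32-subcarrier channel with three propagation paths.
true_taps = [0j] * 32
true_taps[0], true_taps[2], true_taps[5] = 1.0 + 0j, 0.5j, -0.25 + 0j
channel = dft(true_taps)

for k in (1, 3):
    estimate = reconstruct_csi(compress_csi(channel, k), len(channel))
    print(k, "taps reported, NMSE =", nmse(channel, estimate))
```

Reporting fewer taps saves uplink bandwidth but raises the reconstruction error, which is the same balance a learned compressor would be trained to strike.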

The wireless industry is at a critical juncture. With the upcoming rollout of 5G Advanced and 6G standards, next-generation wireless systems will deploy more AI-native technologies. Engineers designing modern wireless systems have realized that integrating AI-native technologies is vital to their success.