SoC designers need to build PCIe 7.0 into new AI chips today. First-pass silicon success is critical to meeting the growing performance and bandwidth demands of data-intensive applications.

Data center technologies need to evolve to handle AI’s growing workloads and demands, especially as the number of parameters in AI models doubles every four to six months, 4X faster than Moore’s Law (Meta, 2023). Today’s largest AI models have trillions of parameters, pushing existing infrastructure to its limits. As a result, more capacity, greater resources, and faster interconnects are required.

According to Synergy Research Group, the global capacity of hyperscale data centers will more than double in size in the next six years to meet the needs of generative AI. To keep up with this growing demand, the data center ecosystem relies on standards such as Peripheral Component Interconnect Express (PCIe), Compute Express Link (CXL), Ethernet, and High-Bandwidth Memory (HBM) to provide the framework for the performance, capacity, bandwidth, and low latency required to transfer data throughout the system.

For data center chip designs to succeed, fast and efficient interconnects and interfaces are critical. Designers need higher performance with minimal latency, the ability to move massive amounts of data, and access to advanced interface IP that delivers bandwidth and power efficiency while maintaining interoperability with a complex, evolving ecosystem.

To meet these requirements, data center interconnects need to support PCIe 7.0, the latest specification of this critical standard. Although the standard is not yet ratified, it’s important to build IP that supports PCIe 7.0 into silicon roadmaps now, especially since today’s chips take a year or more to produce. Figure 1 highlights how PCIe 7.0 is key to interconnect providers and could power every interconnect in the AI/ML fabric.

Figure 1. PCIe 7.0 offers the bandwidth and load-store capabilities needed to connect multiple accelerators, enabling them to process large, complex AI models effectively.

The next leap in performance

PCIe 7.0 is poised to deliver the bandwidth needed to scale interconnects in hyperscale data centers. By providing fast, secure data transfers with up to 512 GB/s of bidirectional bandwidth on a x16 link, PCIe 7.0 gives data centers bandwidth headroom that helps mitigate data bottlenecks for years to come.
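As a rough check of the headline figure, assume a x16 link running at the 128 GT/s per-lane rate PCIe 7.0 targets, where each GT/s corresponds to one raw gigabit per second per lane, and count both directions of the link. The sketch below ignores FLIT framing, CRC, and FEC overhead, so delivered payload bandwidth will be somewhat lower than these raw numbers.

```latex
\begin{aligned}
B_{\text{one way}}   &= \frac{128\ \text{GT/s} \times 16\ \text{lanes}}{8\ \text{bits/byte}} = 256\ \text{GB/s} \\
B_{\text{both ways}} &= 2 \times B_{\text{one way}} = 512\ \text{GB/s}
\end{aligned}
```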

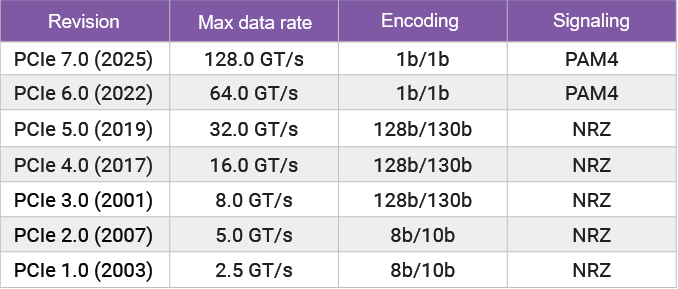

Enabled by interface IP, the high-speed interfaces found on processors, accelerators, switches, and other devices move data between CPUs and accelerators and across the entire compute fabric, including retimers, memories, switches, and network interface cards. Compared to PCIe 6.0, PCIe 7.0 keeps the same link widths (up to x16) and doubles the per-lane data rate from 64 GT/s to 128 GT/s, which doubles the bandwidth at every link width. The faster signaling rate also shortens the time needed to move each packet across the link, helping reduce latency for real-time processing and responsiveness in AI algorithms and for high-speed data processing in high-performance computing (HPC). PCIe 7.0 also maintains backward compatibility with previous PCIe generations, ensuring interoperability with existing hardware while offering scalability for future upgrades. Table 1 highlights how PCIe has changed through its generations.
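The generational doubling can be sketched in a few lines of Python. This is an illustrative calculation, not an official PCI-SIG table: it assumes a x16 link, uses the published per-lane rates (2.5 GT/s for PCIe 1.0 up to 128 GT/s for PCIe 7.0), applies 8b/10b encoding for PCIe 1.0/2.0 and 128b/130b for 3.0 through 5.0, and treats 6.0/7.0 FLIT mode as having no line-encoding overhead while deliberately leaving FLIT framing, CRC, and FEC overhead out of the model. The helper names `link_bandwidth_gbytes` and `generation_table` are hypothetical.

```python
from fractions import Fraction

# (generation, raw per-lane rate in GT/s, line-encoding efficiency)
# Assumption: PCIe 6.0/7.0 FLIT mode has no 8b/10b-style line code; FLIT
# framing, CRC, and FEC overhead are intentionally NOT modeled here.
GENERATIONS = [
    ("1.0", Fraction("2.5"), Fraction(8, 10)),
    ("2.0", Fraction(5), Fraction(8, 10)),
    ("3.0", Fraction(8), Fraction(128, 130)),
    ("4.0", Fraction(16), Fraction(128, 130)),
    ("5.0", Fraction(32), Fraction(128, 130)),
    ("6.0", Fraction(64), Fraction(1)),
    ("7.0", Fraction(128), Fraction(1)),
]


def link_bandwidth_gbytes(rate_gt_s, efficiency, lanes=16):
    """Approximate one-direction link bandwidth in GB/s.

    The GT/s figure is treated as the raw per-lane bit rate, so
    GB/s = GT/s * lanes * encoding efficiency / 8 bits per byte.
    """
    return rate_gt_s * lanes * efficiency / 8


def generation_table(lanes=16):
    """Return (generation, GT/s, GB/s one way, GB/s both ways) rows."""
    rows = []
    for gen, rate, eff in GENERATIONS:
        one_way = link_bandwidth_gbytes(rate, eff, lanes)
        rows.append((gen, float(rate), float(one_way), float(2 * one_way)))
    return rows


if __name__ == "__main__":
    print(f"{'Gen':>4} {'GT/s':>6} {'x16 GB/s one way':>17} {'x16 GB/s total':>15}")
    for gen, rate, one_way, both in generation_table():
        print(f"{gen:>4} {rate:>6.1f} {one_way:>17.1f} {both:>15.1f}")
```

Running it prints one row per generation; the PCIe 7.0 row works out to 256 GB/s per direction and 512 GB/s in total, twice the PCIe 6.0 row. Using `Fraction` keeps the encoding ratios exact until the final conversion to floats.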

Maintaining critical interoperability

The beauty of interoperability (and of mature standards such as PCIe) is that it lets vendors across a diverse ecosystem build components and systems that work reliably with one another. When designing the fastest chips in the world, it’s imperative to ensure seamless operation over an extended period. When all the pieces interoperate, designers avoid the excessive downtime and performance issues that incompatibilities cause.

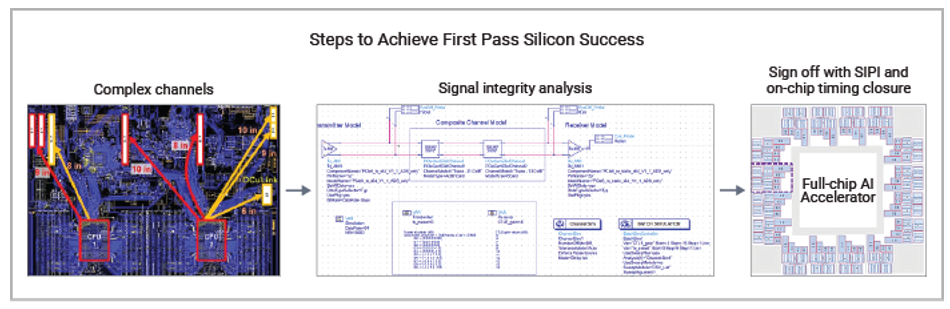

Before designing a system, and even before choosing any IP, designers should go through an exhaustive evaluation process. PCIe has many variants, lane counts, media, form factors, and reaches to consider. For example, these designs generally need many high-speed lanes, and many PCIe lanes switching simultaneously draw a large amount of power, making power integrity a concern. If IR drop occurs during simultaneous switching, the link cannot reach full performance. Signal integrity analysis (Figure 2) is equally important, because the signal transmitted between the AI accelerator and the CPU must arrive intact. As a result, power-integrity and signal-integrity expertise is critical for engineers to achieve optimal performance.
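To see why simultaneous switching makes power integrity a concern, here is a minimal static IR-drop sketch based on Ohm’s law (V = I × R). It assumes every active lane draws the same current through a single lumped power-delivery-network (PDN) resistance and ignores dynamic (L·di/dt) noise, so it only illustrates how drop scales with lane count. The function names, per-lane current, PDN resistance, supply voltage, and 5% budget below are hypothetical placeholders, not values from any PCIe specification or product.

```python
# Minimal static IR-drop estimate for N lanes switching at once.
# Hypothetical assumptions: identical current per lane, one lumped PDN
# resistance, DC only (dynamic L*di/dt noise is not modeled).


def ir_drop_mv(active_lanes: int, current_per_lane_ma: float,
               pdn_resistance_mohm: float) -> float:
    """Return static IR drop in millivolts.

    Ohm's law: V = I * R. Milliamps times milliohms gives microvolts,
    so divide by 1000 to convert to millivolts.
    """
    total_current_ma = active_lanes * current_per_lane_ma
    return total_current_ma * pdn_resistance_mohm / 1000.0


def within_budget(drop_mv: float, supply_mv: float, budget_pct: float) -> bool:
    """True if the drop stays within budget_pct percent of the supply voltage."""
    return drop_mv <= supply_mv * budget_pct / 100.0


if __name__ == "__main__":
    SUPPLY_MV = 750.0       # hypothetical supply rail
    BUDGET_PCT = 5.0        # hypothetical allowed drop (5% of supply)
    LANE_CURRENT_MA = 50.0  # hypothetical current per active lane
    PDN_MOHM = 20.0         # hypothetical lumped PDN resistance

    for lanes in (1, 4, 8, 16, 32, 64):
        drop = ir_drop_mv(lanes, LANE_CURRENT_MA, PDN_MOHM)
        status = "ok" if within_budget(drop, SUPPLY_MV, BUDGET_PCT) else "over budget"
        print(f"{lanes:>3} lanes: {drop:6.1f} mV  ({status})")
```

With these placeholder numbers the drop grows linearly with lane count and crosses the 37.5 mV budget somewhere between 32 and 64 simultaneously switching lanes. Real sign-off relies on full power-integrity simulation rather than this lumped estimate.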

Figure 2. PCIe’s high-speed digital signals require rigorous signal-integrity analysis to reach sign-off for first-pass silicon.

Why PCIe 7.0 today?

Tomorrow’s AI clusters, with their accelerators, switches, network interface cards, and more, must be deployable at the same time to enable data-intensive operations and mitigate data bottlenecks. Early access to IP that supports PCIe 7.0 ahead of the standard’s ratification is mission-critical for companies that want to start their next HPC and AI chip designs early, confident that when those chips are deployed they will deliver the bandwidth and performance the world’s fastest systems require.