5G moved from FDD to TDD to improve spectrum efficiency. That move introduced new timing challenges for keeping networks in sync.

5G technology has changed the telecom industry, bringing higher data speeds, lower latency, and enhanced connectivity. The deployment of 5G networks, however, comes with significant financial investments, particularly in spectrum licenses. The major mobile operators in the United States have collectively spent nearly $100 billion on 5G mid-band spectrum.

Efficient spectrum utilization is crucial to maximizing the return on this investment. Time and phase synchronization plays a critical role in optimizing 5G spectrum utilization. This article focuses on Time-Division Duplex (TDD), Carrier Aggregation (CA), and the synchronization strategies supported by the O-RAN Alliance.

The Importance of Synchronization in 5G Networks

In previous generations of mobile networks, synchronization was essential for facilitating call handovers between base stations as users moved from one cell to another. In 5G, synchronization plays an even more significant role by enabling the high capacity and low latency that users expect. For instance, while a 3 GB movie might take about 30 minutes to download on a 4G network, the same movie can be downloaded in just 35 seconds on a 5G network.
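As a quick sanity check on the download example, the sketch below works out the average throughput each figure implies. It assumes the movie size is 3 decimal gigabytes (3 × 10^9 bytes); the function name is illustrative only.

```python
def implied_throughput_mbps(size_gigabytes: float, seconds: float) -> float:
    """Average throughput in Mb/s needed to move a file of this size in this time."""
    bits = size_gigabytes * 1e9 * 8  # decimal gigabytes -> bits
    return bits / seconds / 1e6      # bits per second -> megabits per second


lte_mbps = implied_throughput_mbps(3, 30 * 60)  # 30 minutes on 4G
nr_mbps = implied_throughput_mbps(3, 35)        # 35 seconds on 5G

print(f"4G: {lte_mbps:.1f} Mb/s, 5G: {nr_mbps:.1f} Mb/s")
# 4G: 13.3 Mb/s, 5G: 685.7 Mb/s
```

Under that assumption, the 5G figure corresponds to roughly a 50-fold increase in sustained throughput.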

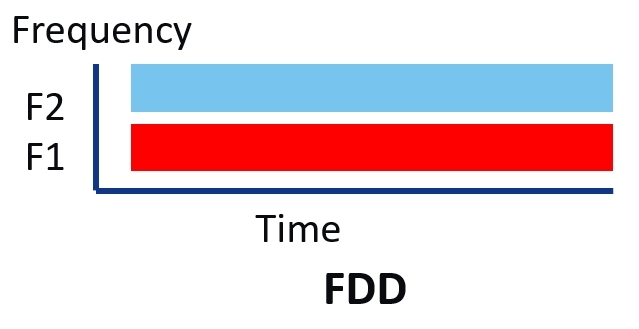

A 4G network primarily operates in Frequency-Division Duplex (FDD) mode, which uses paired frequency bands, separated by a guard band, to transmit uplink and downlink simultaneously. FDD, however, requires a fixed, symmetric 50/50 uplink/downlink ratio, which is sub-optimal in terms of spectrum utilization (Figure 1).

Time-Division Duplex (TDD) in 5G

A 5G network introduces a Time-Division Duplex (TDD) mode to offer more flexibility and increase capacity. TDD requires only one band for uplink and downlink, with a small guard period (time gap) when switching from downlink to uplink transmission. The transmission is multiplexed in time slots that can be dynamically allocated for uplink and downlink, adapting to traffic characteristics.

TDD is more efficient than FDD because it enables dynamic allocation of time slots based on traffic demand, making it possible to optimize spectrum utilization (Figure 2). Additionally, TDD is less expensive because it does not require a diplexer (filter) to isolate transmission and reception. TDD is also better suited for critical 5G features such as massive Multiple Input Multiple Output (mMIMO), beamforming, and pre-coding techniques that enhance spectral efficiency and provide reliable coverage.
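The idea of demand-driven slot allocation can be sketched in a few lines. The example below is a simplified illustration, not an actual 5G NR scheduler: the function name, the 10-slot frame, and the use of one whole slot as the guard period are all assumptions made for clarity.

```python
def build_tdd_frame(dl_demand: float, ul_demand: float, slots: int = 10) -> list[str]:
    """Split a frame into downlink (D), guard (G), and uplink (U) slots.

    One slot is reserved as the guard period at the downlink-to-uplink
    switch; the remaining slots are shared in proportion to traffic demand.
    """
    if slots < 3:
        raise ValueError("need at least one D, one G, and one U slot")
    if dl_demand <= 0 or ul_demand <= 0:
        raise ValueError("demands must be positive")
    usable = slots - 1  # every slot except the guard slot
    dl_slots = round(usable * dl_demand / (dl_demand + ul_demand))
    dl_slots = min(max(dl_slots, 1), usable - 1)  # keep at least one slot each way
    ul_slots = usable - dl_slots
    return ["D"] * dl_slots + ["G"] + ["U"] * ul_slots


print("".join(build_tdd_frame(dl_demand=4, ul_demand=1)))  # DDDDDDDGUU
print("".join(build_tdd_frame(dl_demand=1, ul_demand=2)))  # DDDGUUUUUU
```

A fixed 50/50 FDD split cannot follow the traffic mix in this way, which is the efficiency argument made above.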

Synchronization in TDD

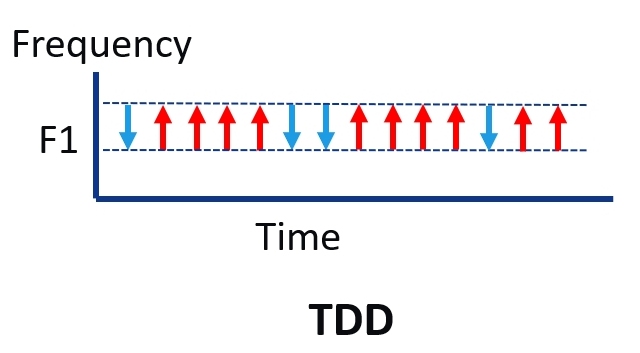

Time and phase synchronization are essential for TDD to avoid cross-slot interference between downlink and uplink transmissions. Base stations (remote units in 5G) must use the same downlink and uplink time slot assignment reference. Without synchronization, interference can be mitigated to some extent, but this requires stricter RF emission requirements, isolation distance, and a larger guard band, all of which reduce spectrum efficiency and increase operational costs (Figure 3).
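To make cross-slot interference concrete, the sketch below compares the slot patterns of two neighboring cells and lists the slots in which one cell transmits downlink while the other is receiving uplink. It is a toy model: the function name is hypothetical, patterns are strings of D/G/U slots, and timing error is modeled as a cyclic shift by whole slots rather than the sub-slot offsets seen in real networks.

```python
def cross_link_slots(pattern_a: str, pattern_b: str, offset_b: int = 0) -> list[int]:
    """Return slot indices where one cell is in downlink while the other is in uplink.

    offset_b delays cell B's frame start by a whole number of slots (cyclic shift).
    """
    if len(pattern_a) != len(pattern_b):
        raise ValueError("patterns must have the same length")
    n = len(pattern_a)
    shifted_b = [pattern_b[(i - offset_b) % n] for i in range(n)]
    return [
        i for i, (a, b) in enumerate(zip(pattern_a, shifted_b))
        if {a, b} == {"D", "U"}  # opposite link directions in the same slot
    ]


frame = "DDDDDDDGUU"
print(cross_link_slots(frame, frame))               # [] : aligned, same pattern
print(cross_link_slots(frame, frame, offset_b=2))   # [0, 1, 8] : timing offset
print(cross_link_slots(frame, "DDDGUUUUUU"))        # [4, 5, 6] : different patterns
```

The three cases illustrate why neighboring cells need both a common time reference and a common slot assignment.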

The GSM Association (GSMA), a nonprofit industry organization representing the interests of mobile network operators worldwide, recommends that all TDD networks, whether LTE or 5G NR, operating in the same frequency range and within the same area be synchronized. It also urges regulators to prioritize TDD synchronization to address interference issues among national and international networks.

Carrier Aggregation in 5G

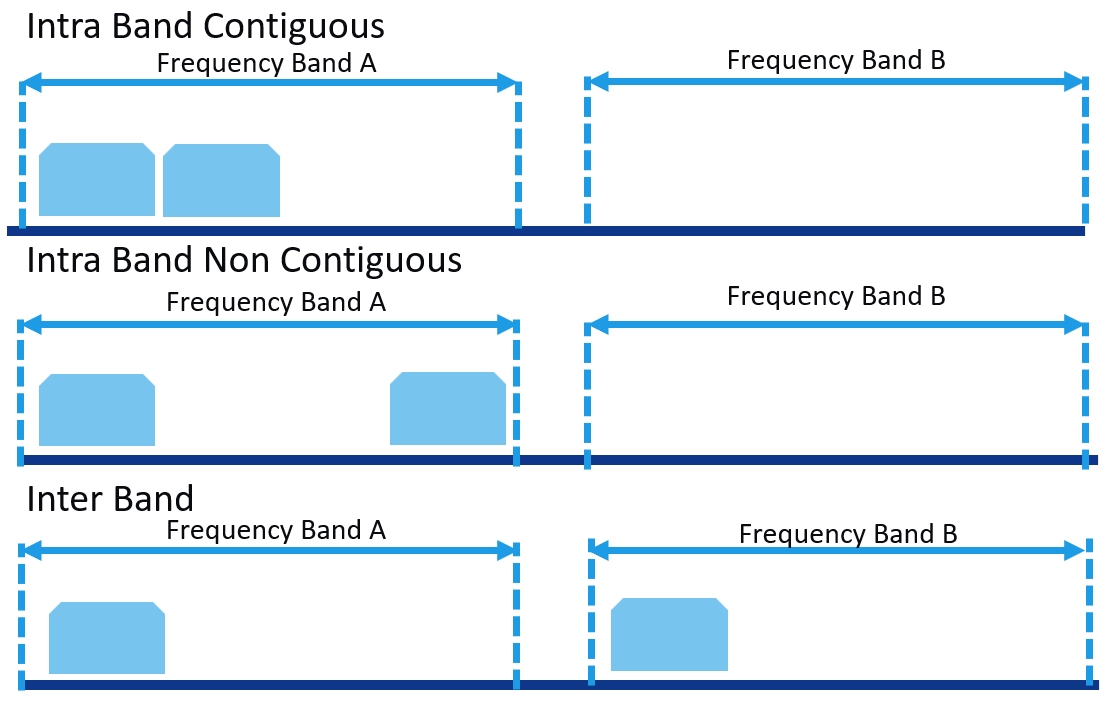

Carrier aggregation (CA) is another core feature of 5G that maximizes the use of the available spectrum. CA combines several channels, either within the same frequency band or across different frequency bands, in FDD and TDD spectrum, to improve capacity and achieve higher data rates. This technology is crucial for extending coverage, reducing the number of deployed cell sites, and lowering deployment costs.

5G operates in two frequency ranges: FR1 (sub-6 GHz) and FR2 (mmWave, 24.25 GHz to 52.6 GHz). FR1 further divides into the low band (sub-1 GHz) and mid-band (1 GHz to 6 GHz). Low-frequency ranges provide excellent coverage because their long wavelengths propagate deeper indoors. These ranges are typically deployed in FDD mode, which has a higher uplink signal strength than TDD mode. High-frequency ranges deliver the largest capacity and lowest latency in TDD mode but are limited by poorer uplink signal strength than mid- and low-frequency ranges. The mid-frequency ranges in TDD mode offer the best balance between coverage and capacity (Table 1).

| | Low-frequency band (FR1)-FDD | Mid-frequency band (e.g., C-Band) (FR1)-TDD | High-frequency band, mmWave-TDD |
| --- | --- | --- | --- |
| Coverage | *** | ** | * |
| Bandwidth | * | ** | *** |

Figure 4 shows that CA imposes specific time and phase synchronization requirements on the network. For instance, FR2 intra-band contiguous CA requires a maximum Time Alignment Error (TAE) of 130 ns, while FR1 intra-band contiguous and FR2 intra-band non-contiguous CA require a maximum TAE of 260 ns.
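The TAE limits quoted above lend themselves to a simple lookup. The sketch below encodes only those figures and checks a measured value against them; it reads the 130 ns figure as the FR2 intra-band contiguous case, and the table and function names are illustrative rather than taken from any standard or tool.

```python
# Maximum Time Alignment Error (ns) per frequency range and CA type,
# limited to the cases quoted in the text.
MAX_TAE_NS = {
    ("FR2", "intra-band contiguous"): 130,
    ("FR1", "intra-band contiguous"): 260,
    ("FR2", "intra-band non-contiguous"): 260,
}


def check_tae(freq_range: str, ca_type: str, measured_tae_ns: float) -> bool:
    """Return True if the measured time alignment error is within the quoted limit."""
    limit_ns = MAX_TAE_NS[(freq_range, ca_type)]
    return abs(measured_tae_ns) <= limit_ns


print(check_tae("FR1", "intra-band contiguous", 180))  # True
print(check_tae("FR2", "intra-band contiguous", 180))  # False
```

The same 180 ns alignment error passes in one configuration and fails in another, which is why the timing budget has to be planned per CA combination.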

Synchronization in Open RAN 5G Networks

The O-RAN Alliance has emerged as a significant standards body shaping the definition of the 5G network architecture. Established in 2018, the O-RAN Alliance aims to disaggregate the Radio Access Network (RAN) with open and standardized interfaces, following the trend started in data centers. This approach fosters innovation and reduces deployment costs by allowing mobile operators to mix and match interoperable components from different vendors.

Figure 4. Carrier aggregation lets data travel in both intra-band and inter-band combinations, improving spectrum use.

Legacy cell sites consist of a Remote Radio Head (RRH), a GNSS antenna, and a Baseband Unit (BBU), all deployed on site. In contrast, the 5G network architecture has been disaggregated into Radio Units (RUs), Distributed Units (DUs), and Centralized Units (CUs). Centralizing and sharing BBUs as DUs and CUs results in significant operational expenditure (OpEx) savings in power and cooling, since cell sites consume approximately 70% of the RAN’s power. Sharing BBU processors in a centralized location also offers significant capital expenditure (CapEx) savings, because on cell sites they were used at only a fraction of their full capacity. The RU, DU, and CU software can be virtualized, containerized, and deployed on cheaper general-purpose hardware (Figure 5).

In previous generations of mobile networks, it was common to deploy a GNSS Primary Reference Time Clock (PRTC) locally on each cell site. Any change, upgrade, or maintenance had to be performed on each cell site. Additionally, most GNSS antenna receivers are accurate within a range of ±100 ns, making key features such as CA challenging to deploy.
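A back-of-the-envelope sketch shows why ±100 ns per receiver is tight. It assumes two radios, each timed by its own GNSS receiver, whose errors are independent and can take opposite signs; the function name is illustrative, and the limits are the TAE figures quoted earlier in the article.

```python
def worst_case_relative_error_ns(accuracy_a_ns: float, accuracy_b_ns: float) -> float:
    """Worst-case time difference between two clocks that each stay within +/- their accuracy."""
    return accuracy_a_ns + accuracy_b_ns


GNSS_ACCURACY_NS = 100  # +/-100 ns per receiver, as quoted in the text
relative_ns = worst_case_relative_error_ns(GNSS_ACCURACY_NS, GNSS_ACCURACY_NS)

for limit_ns in (130, 260):  # TAE limits quoted earlier in the article
    margin_ns = limit_ns - relative_ns
    print(f"TAE limit {limit_ns} ns: margin {margin_ns:+.0f} ns")
# TAE limit 130 ns: margin -70 ns
# TAE limit 260 ns: margin +60 ns
```

Under these assumptions, the worst-case 200 ns relative error already exceeds the 130 ns limit and leaves little headroom against 260 ns before any other error source is counted.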

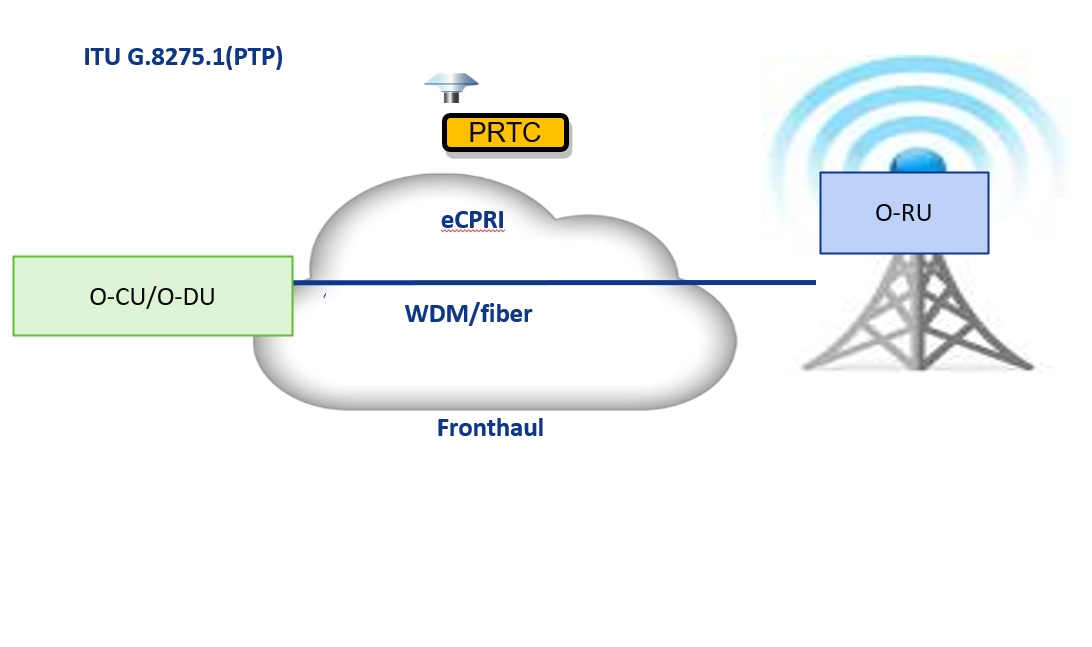

For 5G, the O-RAN Alliance advocates for network timing distribution as the preferred synchronization strategy, using the open and standardized ITU-T G.8275.1 and ITU-T G.8275.2 PTP telecom profiles. The ITU-T G.8275.1 profile is deployed in the fronthaul network, where a Primary Reference Time Clock/Telecom Grandmaster (PRTC/T-GM) synchronizes switches equipped with boundary clocks, DUs, and RUs.
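At the heart of PTP is a four-timestamp exchange from which each clock estimates its offset from the upstream clock. The sketch below shows the standard IEEE 1588 delay request-response arithmetic; the function name and the timestamp values are made up for illustration and do not come from any particular product or PTP stack.

```python
def ptp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple[float, float]:
    """Estimate offset from master and mean path delay (same units as the inputs).

    t1: master sends Sync           (master clock)
    t2: slave receives Sync         (slave clock)
    t3: slave sends Delay_Req       (slave clock)
    t4: master receives Delay_Req   (master clock)
    """
    mean_path_delay = ((t2 - t1) + (t4 - t3)) / 2
    offset_from_master = ((t2 - t1) - (t4 - t3)) / 2
    return offset_from_master, mean_path_delay


# Slave runs 150 ns ahead of the master; path delay is 500 ns in each direction.
print(ptp_offset_and_delay(t1=1000, t2=1650, t3=2000, t4=2350))  # (150.0, 500.0)

# Clocks perfectly aligned, but the path is asymmetric (600 ns out, 400 ns back).
print(ptp_offset_and_delay(t1=0, t2=600, t3=1000, t4=1400))      # (100.0, 500.0)
```

The second case shows the method's main weakness: the calculation assumes a symmetric path, so half of any delay asymmetry appears as a false offset. Timing-aware network elements such as boundary clocks help keep that error small.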

Deploying a PRTC/T-GM in the fronthaul network aligns with the 5G disaggregated RAN architecture and centralizes timing functions in one location, where they can be shared, pooled, maintained, and upgraded flexibly and economically. This approach eliminates the need to deploy a local PRTC/T-GM at each cell site and avoids the need for more expensive oscillators on cell sites.

Microchip has conducted extensive synchronization, interoperability, and performance tests with a wide range of vendors in the mobile network ecosystem. These tests, conducted during multi-vendor interoperability showcases run by EANTC and at IEEE ISPCS “plugfests,” help ensure that Microchip’s products meet high performance and reliability standards.

Conclusion

Time and phase synchronization are critical functions in 5G networks, enabling technologies such as TDD and CA to optimize spectrum utilization and reduce deployment costs. The O-RAN Alliance’s vision of a disaggregated RAN with open and standardized interfaces further emphasizes the importance of synchronization in modern network architectures.

By implementing robust synchronization strategies and leveraging advanced timing solutions, mobile operators can ensure the efficient utilization of the 5G spectrum, ultimately enhancing network performance and reducing operational costs.

Related content

See EE World’s collection of timing articles for more on 5G network timing.