EE World spoke with GlobalFoundries’ Anthony Yu about trends and tradeoffs in co-packaged optics and silicon photonics, driven by the rising data demand that AI thrusts upon us. It’s coming down to speed versus reliability, at least for now.

The demand for data always increases. AI is disrupting what had been a steady progression in the speed of optical and electrical signals in data centers and networks. At OFC 2024, the feeling was that a step increase in data consumption would exert pressure on engineers to increase data rates even faster. Indeed, one forecast predicts that data center investments will grow at a 24% CAGR through 2028. This pressure pushes semiconductor manufacturers, but how, and what’s the reliability hook?

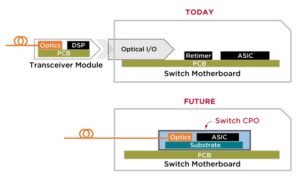

Data centers currently use pluggable optical modules to connect servers and network switches. The modules, mounted on the faceplates of blades, are easy to replace should one fail or an optical connection break. The problem is that as the electrical signals reach 224 Gb/sec per lane, they lose signal integrity because of the long connection from a blade’s faceplate to a switch ASIC on a board. PCB traces won’t work anymore. To mitigate these issues at lower speeds, the industry switched to cables running from the optical transceiver to a point close to the ASIC. That helped, but the copper links are still too long.

An effort is underway to move the optical transceiver into the ASIC package. This technology, called co-packaged optics, integrates the optical engine and switch silicon onto the same substrate without requiring signals to traverse the PCB. Co-packaged optics use silicon photonics, which moves light on a device, further shortening the distance that electrical signals must travel.

Unfortunately, moving the optical components inside the ASIC package means trouble if something fails. ASICs are too expensive to replace because of an optical failure.

EE World spoke with Anthony Yu of GlobalFoundries following OFC 2024. Yu explained how silicon photonics and co-packaged optics combine to improve data rates and what the industry is doing to alleviate the issue of what to do should a failure occur. It’s a tradeoff and a work in progress.

EE World: Anthony Yu of GlobalFoundries. Thank you for speaking to EE World. We’re going to talk about silicon photonics and co-packaged optics. You and I spoke offline in 2023, but things have changed. I heard people talk of the coming data tsunami this year at OFC. How will we move the bits quickly and reliably enough to keep up? People from the fiber optics and big network industries want this from the chips your company manufactures. They work continually to improve speed, usually by factors of two. That’s what’s been going on for years. Now, everybody’s a little worried, from the network to the chip level. What’s happening, and what will it take to move those bits fast enough?

Yu: You’re right. A year ago, we first spoke about photonics. But the world has certainly changed since our last discussion. I was also at OFC, completely immersed in customer meetings and with the data center people. I picked up a couple of themes that color the world I lead today: the foundry for silicon photonics. The use case for silicon photonics in optical interconnects highlights the discussion you just brought up, which is where my attention has been focused over the last year.

At OFC, we saw Nvidia and AMD attacking smaller nodes and talking about 3-nm GPUs. Shortly after OFC, Nvidia announced the Blackwell GPU for AI. What connected it all at OFC was an anticipation of more powerful GPUs for large language models (LLMs) such as GPT-3 and GPT-4. The influx of data you just cited is magnified by the number of parameters required for these LLMs. They said at OFC that GPT-4 would have upwards of 1.8 trillion parameters. To accomplish that, you need all the GPUs within the data center that are used for training to act as one giant chip, which drives the need for low latency and optical interconnect. The discussion was, “How do we do that?” We can’t do that using just conventional pluggable transceivers. We need to use co-packaged optics.

Last year, we spoke about seeing an uptick in pluggable transceivers, which go in the faceplate of servers, to connect these banks of servers. Now, we’re talking about co-packaged optics. The GPUs within the servers can be connected through a compute fabric. I would say the most significant difference since 2023 is now we have this concept of compute fabric, which is ushering in the need for co-packaged optics. I’m sure you heard that at OFC. “When will it happen?”

EE World: What do you mean by co-packaged optics?

Yu: As opposed to having a pluggable transceiver on the front of the faceplate connected by a copper connection to the ASIC, co-packaged optics move the optics onto the same package as the ASIC, resulting in a very short distance for signals to travel.

EE World: So that means the fiber optic cable coming into a switch or a blade of a switch, instead of ending at the module, will go into the blade, connect it, and then make its connection. It is much closer to the GPU. The connection would be optical at that point, going all the way into the GPU because there would be optics to receive, and the optics to send would be within the same package of the GPU. Is that correct?

Yu: That’s correct. If you think about it, you can imagine that if you open one of these, you’ll see many connections within the box. Part of the challenge of co-packaged optics is to pack as much transmit and receive density as possible within a given millimeter. What you described is exactly what’s leading to a couple of metrics. One is energy efficiency, measured in picojoules per bit. Next is density. How much bandwidth can you pack into a square millimeter?
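As a rough illustration of the two metrics Yu names, the sketch below turns an energy efficiency in picojoules per bit into interconnect power and computes a bandwidth density per square millimeter. The function names and the example figures (5 pJ/bit, 100 mm²) are assumptions chosen for illustration, not values from the interview; only the 51.2 Tb/sec switch bandwidth appears in the discussion.

```python
def optical_io_power_watts(bandwidth_tbps: float, energy_pj_per_bit: float) -> float:
    """Power drawn by the interconnect at a given energy per bit.

    (Tb/s * 1e12 bit/s) * (pJ/bit * 1e-12 J/bit) = watts, so the
    scale factors cancel and the product is already in watts.
    """
    return bandwidth_tbps * energy_pj_per_bit


def bandwidth_density_tbps_per_mm2(bandwidth_tbps: float, area_mm2: float) -> float:
    """Bandwidth packed into each square millimeter of optical engine area."""
    if area_mm2 <= 0:
        raise ValueError("area_mm2 must be positive")
    return bandwidth_tbps / area_mm2


if __name__ == "__main__":
    # Assumed example: 51.2 Tb/s of optical I/O at a hypothetical 5 pJ/bit.
    power = optical_io_power_watts(51.2, 5.0)
    # Assumed example: the same bandwidth over a hypothetical 100 mm^2.
    density = bandwidth_density_tbps_per_mm2(51.2, 100.0)
    print(f"power ~ {power:.0f} W, density ~ {density:.3f} Tb/s per mm^2")
```

Under these assumed numbers, every picojoule per bit saved at 51.2 Tb/sec is worth roughly 51 W, which is why the metric gets so much attention.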

EE World: One of the reasons we have these pluggable optics is that cables break, and things can fail. You can easily swap a module if there’s a connection break at the switch. When you put the optics right on the chip, what happens if there’s a failure? You can’t just replace the entire module and all the connections that go into that blade. What happens? It seems to me that the reliability must go way up.

Yu: You’re addressing one of the problems with co-packaged optics. At OFC, Broadcom announced that they have a 51.2 Tb/sec switch with co-packaged optics that is commercially available. More co-packaged optics will enter the data center within the next two years.

One of the things that we’re working to address mechanically is detachability. Today, if you’ve got a bad connection, you can pop the pluggable transceiver out of the faceplate and replace it with a new one or fix the connection. For co-packaged optics, one of the things that they’re working on with packaging providers is a detachable connection to the chip. That provides a way to mechanically replace the connection of the fibers to the chip without disturbing the ASIC itself. To make this happen, we need extremely high reliability. That’s what we’re all working towards. Optics still need to be field-replaceable without disturbing the ASIC.

EE World: So now you’ll have many optical fibers coming to the GPU. Is each fiber going to have its connection or path, or will it be somehow bundled together in an optical connector? Again, what happens if there’s a failure? If you’ve got cables and one fails, can you pull one out and replace it? Are there any alignment problems that come into play?

Yu: That’s addressed at a chip level in a couple of ways. At GlobalFoundries, we have passive fiber attachment, which means you do not have to mechanically align each fiber to its respective waveguide or, to be more precise, converter. An ASIC could have as many as six, eight, or 12 optical interconnect chips surrounding it. There’s not going to be just one interconnect to an ASIC with gobs and gobs of fiber; depending on the amount of bandwidth required, there will be a number of these chips around it. Each one of those chips will have a fiber array, so there won’t be individual fibers attached as many as 40 or 60 times to an optical interconnect chip. There’s an array, and those arrays would be mechanically connected to the optical interconnect chip, and that’s what would be replaceable. In the instance you describe, if there’s a bad connection despite our passive fiber-attach alignment scheme, you can replace the fiber block while keeping the interconnect chip and the ASIC intact. The array itself, or the fiber block, would be replaceable in the event of a bad connection.

At a chip level, we make sure that as the array is attached to the photonics chip, every one of those connections is low loss as the light comes into the waveguide and then into the chip.

EE World: You’ve got fiber, and the light from this fiber is connected to the package. We’re not going electrical at that point. Are we going electrical once the light is inside the package, or are we also continuing to use what’s called silicon photonics inside the GPU or the ASIC?

Yu: At GlobalFoundries, we use our GF Fotonix process. The ASIC takes the incoming electrical signals, usually streaming in through SerDes at 224 Gb/sec, and converts the bits onto photons through various modulation schemes. Once it’s converted to photons, the data moves around the chip and can then be sent hundreds or thousands of miles down the fiber.

Conversely, light from the fiber comes into the chip as photons, which the germanium photodetector converts into current; a transimpedance amplifier then turns that current back into an electrical signal. The ASIC then processes that electrical signal. The transceiver chip, which is what we build using silicon photonics, does both receive and transmit.
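The receive path described here can be sketched with two textbook relations: a photodetector produces a current proportional to optical power (responsivity, in A/W), and a transimpedance amplifier converts that current into a voltage (gain, in ohms). The example values below (-10 dBm received power, 1 A/W, 1 kΩ) are assumptions for illustration only, not GlobalFoundries device parameters.

```python
def dbm_to_watts(power_dbm: float) -> float:
    """Convert optical power from dBm to watts (0 dBm = 1 mW)."""
    return 1e-3 * 10 ** (power_dbm / 10)


def photocurrent_amps(optical_power_w: float, responsivity_a_per_w: float) -> float:
    """Ideal photodetector: current = responsivity * optical power."""
    return responsivity_a_per_w * optical_power_w


def tia_output_volts(current_a: float, transimpedance_ohms: float) -> float:
    """Ideal transimpedance amplifier: voltage = current * gain."""
    return current_a * transimpedance_ohms


if __name__ == "__main__":
    # All three inputs are assumed, illustrative values.
    received_w = dbm_to_watts(-10.0)
    current = photocurrent_amps(received_w, responsivity_a_per_w=1.0)
    voltage = tia_output_volts(current, transimpedance_ohms=1_000.0)
    print(f"{received_w * 1e6:.0f} uW -> {current * 1e6:.0f} uA -> {voltage * 1e3:.0f} mV")
```

This ignores noise, bandwidth limits, and dark current; it only shows how optical power maps to the voltage the downstream electronics see.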

EE World: What about packaging? We hear 2D packaging, 2.5D, and 3D. How does silicon photonics play into that, and where on that scale does it fit?

Yu: There’s been a migration. We started with pluggable transceivers on the faceplate, some distance away from the ASICs, and moved to where it is today, which is what we have here at GlobalFoundries. Our customers are building 2.5D heterogeneous, integrated, co-packaged devices using chips connected to the package through fine-pitch copper pillars. I think it’ll eventually move to 3D, where you’ll be able to power the chips using through-silicon vias. Using the number system, I’d say today, we’re at 2.5D. Within the next five to ten years, you’ll see everything move to 3D. That configuration will be prevalent for co-packaged optics.

EE World: Now, as you mentioned, 224 Gb/sec per lane. At OFC, everybody was talking about 800G. So that would be four lanes in one fiber. Some people are talking 1.6T. I even saw one slide where somebody was trying to come up with a timeline for 6.4T. As these optical standards bring higher data rates, do silicon photonics have to adapt to them, or can silicon photonics already handle the data rates that aren’t yet on fiber?

Yu: At GlobalFoundries, we present our clients with the ability to use circuit topologies to support what you described. We call it optical scale out. The advantage of fiber optics and silicon photonics, as opposed to copper, is that you can put multiple wavelengths on a single fiber. You can’t do that with copper; you couldn’t send parallel signals down the same wire because of intersymbol interference.

From a photonic standpoint, we have enabled our Process Design Kit (PDK) with coarse wavelength-division multiplexing (CWDM) type filters, allowing our clients to put two or maybe four different wavelengths on a single fiber to achieve the bandwidth densities you cited. Today, one customer is already doing eight wavelengths per fiber. We enable that through our microring resonators, which create what are called dense wavelength-division multiplexing (DWDM) type structures. We in the silicon world have to create the right types of structures to support that optical scale out, and it’s on us to produce the precision needed for those very delicate optical structures. So that’s something we’re doing directly in silicon photonics.
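The arithmetic behind optical scale out is simple multiplication: the rate carried by one fiber is the number of wavelengths times the rate per wavelength. The sketch below assumes a nominal 200 Gb/sec payload per wavelength (the 224 Gb/sec lane rate mentioned earlier includes overhead) and counts fibers in one direction only; both are simplifying assumptions, and the function names are hypothetical.

```python
def fiber_rate_gbps(wavelengths: int, rate_per_wavelength_gbps: int) -> int:
    """Aggregate rate on one fiber carrying several wavelengths."""
    return wavelengths * rate_per_wavelength_gbps


def fibers_needed(total_gbps: int, wavelengths: int, rate_per_wavelength_gbps: int) -> int:
    """Fibers required, per direction, to carry total_gbps (rounded up)."""
    per_fiber = fiber_rate_gbps(wavelengths, rate_per_wavelength_gbps)
    return -(-total_gbps // per_fiber)  # integer ceiling division


if __name__ == "__main__":
    nominal_lane_gbps = 200  # assumed payload rate per wavelength
    for wavelengths in (2, 4, 8):
        rate = fiber_rate_gbps(wavelengths, nominal_lane_gbps)
        count = fibers_needed(51_200, wavelengths, nominal_lane_gbps)
        print(f"{wavelengths} wavelengths: {rate} Gb/s per fiber, "
              f"{count} fibers for 51.2 Tb/s")
```

Under these assumptions, four wavelengths give the 800G figure discussed above, and going from four to eight wavelengths halves the fiber count for the same total bandwidth.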

EE World: What structures other than waveguides move the light to where you need to get it? What happens when you reach the end of that waveguide?

Yu: If I were to try to list every type of structure, we wouldn’t have enough time to go through them all. You can divide the base structures into active and passive components. Active components include the modulators. We have different types of modulators. There’s a Mach-Zehnder modulator; there’s a microring resonator; there are even things like semiconductor-insulator-semiconductor capacitor (SISCAP) Mach-Zehnder modulators. Anything that varies the refractive index of the material, taking advantage of electro-optic material, is one example of an active component.
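To show how varying the refractive index becomes modulation, here is a minimal sketch of the textbook transfer function for an ideal Mach-Zehnder modulator: a drive voltage shifts the phase in one arm, and the two arms interfere at the output. It assumes a lossless, perfectly balanced device with a linear phase response and a half-wave voltage `v_pi_volts`; real silicon modulators deviate from all of these, and the 2 V figure is purely illustrative.

```python
import math


def mzm_transmission(drive_volts: float, v_pi_volts: float) -> float:
    """Fraction of input light transmitted by an ideal Mach-Zehnder modulator.

    The arm phase difference is pi * V / V_pi, and the interference at
    the output combiner gives T = cos^2(phase_difference / 2).
    """
    phase_difference = math.pi * drive_volts / v_pi_volts
    return math.cos(phase_difference / 2) ** 2


if __name__ == "__main__":
    v_pi = 2.0  # assumed half-wave voltage, for illustration only
    for volts in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"V = {volts:.1f} V -> T = {mzm_transmission(volts, v_pi):.3f}")
```

At zero volts the arms add in phase and the light passes; at the half-wave voltage they cancel. Swinging the drive between those points is what writes bits onto the light.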

Another active component would be the detector, which captures photons from the fiber and converts them to electrical pulses. Among the passives, we have different waveguides, which move photons from one point of the chip to the next with low loss. We have a variety of geometric waveguides. Then, you also have to manipulate the polarization. You need to steer the beam and manipulate it many times. Other passives include splitters, which allow you to split the signal into two, sometimes four, legs. There are different types of structures, but they all require patterning to create unique geometric structures with lots of circles and curves. You can’t have sharp bends because light doesn’t like that, and you tend to lose the signal. Designers have all types of different geometries to assemble when building a transceiver.
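Splitting has a cost that is easy to quantify in the ideal case: dividing light equally among N legs leaves each leg with 1/N of the power, a loss of 10·log10(N) dB before any excess loss from the structure itself. The sketch below computes only that ideal figure; the function name is hypothetical, and real splitters add device-dependent excess loss on top.

```python
import math


def ideal_split_loss_db(legs: int) -> float:
    """Per-leg power loss, in dB, of an ideal equal 1-to-N splitter."""
    if legs < 1:
        raise ValueError("legs must be at least 1")
    return 10 * math.log10(legs)


if __name__ == "__main__":
    for legs in (2, 4):
        print(f"1x{legs} splitter: {ideal_split_loss_db(legs):.2f} dB per leg")
```

A two-way split therefore costs about 3 dB per leg and a four-way split about 6 dB, which is budget the designer has to recover elsewhere in the link.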

EE World: Anthony, thank you so much for your time. And thank you for the update on silicon photonics and co-packaged optics.

Yu: It’s been a pleasure, Martin; thank you very much. Take care.